Case Study - Cloud-native applications


Cloud-native applications whiteboard design session student guide

Abstract and learning objectives

In this case study, you will learn about the choices involved in building and deploying containerized applications in Azure, the critical decisions behind them, and other aspects of the solution, including ways to lift-and-shift parts of the application to reduce application changes.

By the end of this case study, you will be better able to design solutions that target Azure Kubernetes Service (AKS) and define a DevOps workflow for containerized applications.

Step 1: Review the customer case study

Outcome

Analyze your customer’s needs.

Customer situation

Fabrikam Medical Conferences provides conference web site services tailored to the medical community. They started out 10 years ago building a few conference sites for a small conference organizer. Since then, word of mouth has spread, and Fabrikam Medical Conferences is now a well-known industry brand. They now handle over 100 conferences per year, and that number is growing.

Medical conference web sites typically have low budgets, because the conferences usually have between 100 and, at the high end, 1,500 attendees. At the same time, the conference owners have significant customization and change demands that require very quick turnaround on the live sites. These changes can affect various aspects of the system, from the UI through to the back end, including conference registration and payment terms.

The VP of Engineering at Fabrikam, Arthur Block, has a team of 12 developers who handle all aspects of development, testing, deployment, and operational management of their customer sites. Due to customer demands, they have issues with the efficiency and reliability of their development and DevOps workflows.

The conference sites are currently hosted on-premises with the following topology and platform implementation:

- The conference web sites are built with the MEAN stack (Mongo, Express, Angular, Node.js).
- Web sites and APIs are hosted on Windows Server machines.
- MongoDB is also running on a separate cluster of Windows Server machines.

Customers are considered “tenants”, and each tenant is treated as a unique deployment whereby the following happens:

- Each tenant has a database in the MongoDB cluster with its own collections.
- A copy of the most recent functional conference code base is taken and configured to point at the tenant database.
  - This includes a web site code base and an administrative site code base for entering conference content such as speakers, sessions, workshops, and sponsors.
- Modifications to support the customer’s styles, graphics, layout, and other custom requests are applied.
- The conference owner is given access to the admin site to enter event details.
  - They will continue to use this admin site for each conference, every year.
  - They can add new events and isolate speakers, sessions, workshops, and other details.
- The tenant’s code (conference and admin web site) is deployed to a specific group of load-balanced Windows Server machines dedicated to one or more tenants. Each group of machines hosts a specific set of tenants, distributed according to the tenants’ scale requirements.
- Once the conference site is live, the inevitable requests begin for changes to the web site pages, styles, registration requirements, and any number of other custom items.

Arthur is painfully aware that this small business has organically grown into what should be a fully multi-tenant application suite for conferences. However, the team is having difficulty approaching this goal. They are constantly updating the code base for each tenant and doing their best to merge improvements into a core code base they can use to spin up new conferences. The pace of change is fast, the budget is tight, and they simply do not have time to stop and restructure the core code base to support all the flexibility customers require.

Arthur is looking to take a step in this direction with the following goals in mind:

- Reduce regressions introduced in a single tenant when changes are made.
  - One of the issues with the code base is that it has many dependencies across features. Seemingly simple changes to one area of code introduce issues with layout, responsiveness, registration functionality, content refresh, and more.
  - To avoid this, he would like to rework the core code base so that registration, email notifications and templates, content, and configuration are cleanly separated from each other and from the front end.
  - Ideally, changes to individual areas will no longer require a full regression test of the site. Given the number of sites they manage, running a full regression test for every change is not tenable.
- Improve the DevOps lifecycle.
  - The process of onboarding a new tenant, launching a new site for an existing tenant, and managing all the live tenants throughout the lifecycle of each conference is highly inefficient and time-consuming.
  - By reducing the effort to onboard customers, manage deployed sites, and monitor health, the company can contain costs and overhead as it continues to grow. This may also free up time to improve the multi-tenant platform they would like to build for long-term growth.
- Increase visibility into system operations and health.
  - The team has little or no aggregate view of health across the deployed web sites.
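To make the regression goal concrete, the following Python sketch models the proposed separation as a dependency graph. It then computes which areas would need retesting when one area changes. The service names and dependencies are hypothetical, chosen only to mirror the areas listed above (registration, email notifications, content, configuration, front end). The underlying idea is standard impact analysis: a change's retest scope is the changed area plus everything that depends on it, directly or transitively, and that set shrinks as coupling is removed.

```python
from collections import deque
from typing import Dict, Set

# Hypothetical services and the services each one calls (caller -> callees).
DEPENDS_ON: Dict[str, Set[str]] = {
    "web": {"content-api", "registration-api", "config-api"},
    "admin": {"content-api", "config-api"},
    "registration-api": {"notification-api", "config-api"},
    "notification-api": {"config-api"},
    "content-api": {"config-api"},
    "config-api": set(),
}


def dependents_of(changed: str, depends_on: Dict[str, Set[str]]) -> Set[str]:
    """Return the changed area plus every area that depends on it, directly or transitively."""
    # Invert the graph so we can walk from a callee to its callers.
    callers: Dict[str, Set[str]] = {name: set() for name in depends_on}
    for caller, callees in depends_on.items():
        for callee in callees:
            callers.setdefault(callee, set()).add(caller)

    # Breadth-first search over the inverted edges.
    affected = {changed}
    queue = deque([changed])
    while queue:
        current = queue.popleft()
        for caller in callers.get(current, set()):
            if caller not in affected:
                affected.add(caller)
                queue.append(caller)
    return affected


if __name__ == "__main__":
    for area in ("notification-api", "content-api", "config-api"):
        print(area, "->", sorted(dependents_of(area, DEPENDS_ON)))
```

With this hypothetical graph, a change to the notification service calls for retesting only notifications, registration, and the public web site, not every area. A change to shared configuration still touches everything, which suggests keeping that contract small and stable.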

While multi-tenancy is a goal for the code base, Arthur believes that even with it in place, there will always be a need for custom copies of code for a particular tenant who requires a one-off implementation. Arthur feels that Docker containers may be a good solution for their short-term DevOps and development agility needs. He also sees them as the right direction once most of their application is multi-tenant.

Customer needs

- Reduce the overhead in time, complexity, and cost for deploying new conference tenants.
- Improve the reliability of conference tenant updates.
- Choose a suitable platform for their Docker container strategy on Azure. The platform choice should:
  - Make it easy to deploy and manage infrastructure.
  - Provide tooling to help them monitor and manage container health and security.
  - Make it easier to manage the variable scale requirements of the different tenants, so that they no longer have to allocate tenants to a specific load-balanced set of machines.
  - Provide a vendor-neutral solution so that a specific on-premises or cloud environment does not become a new dependency.
- Migrate data from the on-premises MongoDB cluster to Azure Cosmos DB with as few changes to the application code as possible.
- Continue to use Git repositories for source control and integrate them into a CI/CD workflow.
- Prefer a complete suite of operational management tools with:
  - A UI for manual deployment and management during development and initial POC work.
  - APIs for integrated CI/CD automation.
  - Container scheduling and orchestration.
  - Health monitoring, alerting, and status visualization.
  - Container image scanning.
- Complete an implementation of the proposed solution for a single tenant to train the team and perfect the process.
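Azure Cosmos DB for MongoDB speaks the MongoDB wire protocol, so existing MongoDB drivers can connect to it. That is why the migration can leave most application code alone: the main change is the connection string. The Python sketch below assumes the application reads its connection string from configuration. The variable name `MONGODB_CONNECTION`, the account name `fabrikam`, and the `<key>` placeholder are hypothetical. The Cosmos DB URI shape and host-name suffixes are assumptions based on the strings the Azure portal shows; copy the real string from the portal rather than hand-building it.

```python
import os
from typing import Mapping, Optional
from urllib.parse import urlsplit

# Hypothetical setting name. On-premises it would hold the existing MongoDB
# cluster URI; after migration, the Azure Cosmos DB for MongoDB URI.
CONNECTION_ENV_VAR = "MONGODB_CONNECTION"
LOCAL_DEFAULT = "mongodb://localhost:27017/"

# Assumed Cosmos DB host suffixes (newer accounts, then older ones).
COSMOS_SUFFIXES = (".mongo.cosmos.azure.com", ".documents.azure.com")


def resolve_connection_string(env: Optional[Mapping[str, str]] = None) -> str:
    """Read the connection string from configuration instead of hard-coding it."""
    source = os.environ if env is None else env
    return source.get(CONNECTION_ENV_VAR, LOCAL_DEFAULT)


def points_at_cosmos(uri: str) -> bool:
    """True if the URI's first host looks like an Azure Cosmos DB endpoint."""
    host = urlsplit(uri).hostname or ""
    return host.endswith(COSMOS_SUFFIXES)


if __name__ == "__main__":
    on_prem = {CONNECTION_ENV_VAR: "mongodb://db1:27017,db2:27017/?replicaSet=rs0"}
    cosmos = {CONNECTION_ENV_VAR: (
        "mongodb://fabrikam:<key>@fabrikam.mongo.cosmos.azure.com:10255/"
        "?ssl=true&replicaSet=globaldb&retrywrites=false"
    )}
    for env in (on_prem, cosmos):
        uri = resolve_connection_string(env)
        # The data-access code that consumes `uri` stays the same either way.
        print(points_at_cosmos(uri), uri)
```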

Customer objections

- There are many ways to deploy Docker containers on Azure. How do those options compare, and what are the motivations for each?
- Is there an option in Azure that provides container orchestration platform features that are easy to manage and migrate to, and that can also handle our scale and management workflow requirements?

Infographic for common scenarios

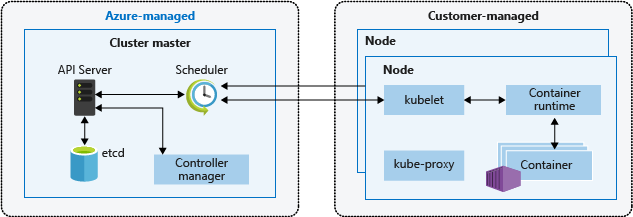

Kubernetes Architecture

Note: This diagram illustrates the Kubernetes topology: the master nodes managed by Azure, and the agent nodes where customers integrate and deploy their applications.

https://docs.microsoft.com/en-us/azure/aks/intro-kubernetes

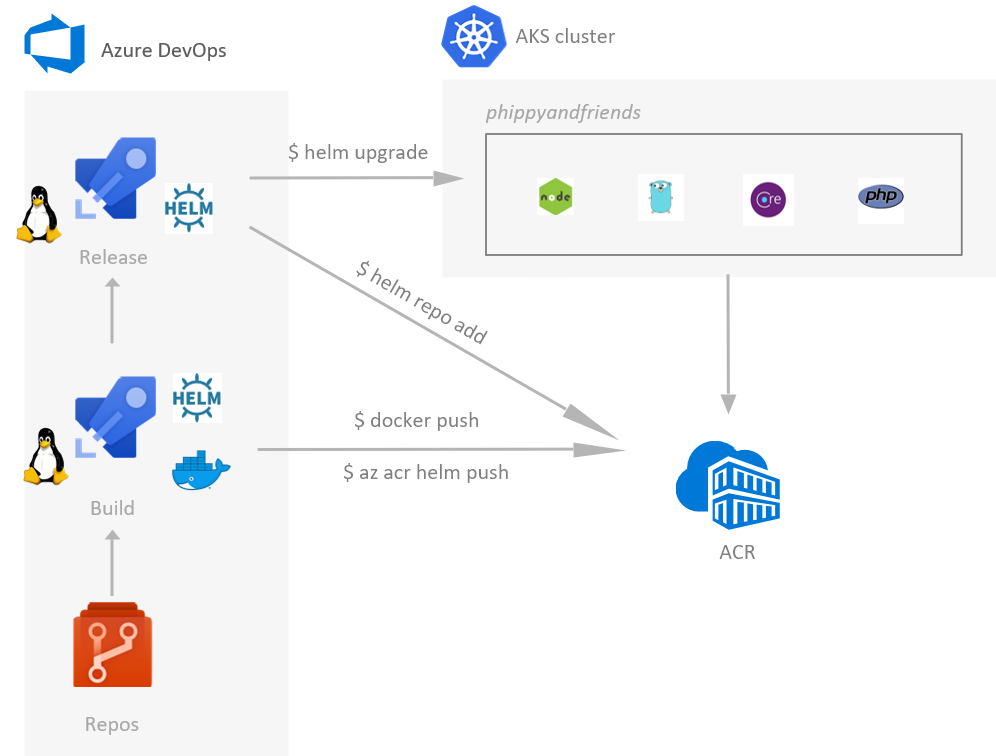

CI/CD to Azure Kubernetes Service with Azure DevOps
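A CI/CD flow to AKS typically works in four steps. It builds a container image on each commit, tags it uniquely, pushes it to the registry, and then points the Kubernetes deployment at the new tag. The Python sketch below only assembles the shell commands such a pipeline step might run; it does not execute them. The registry, image, deployment, and container names are hypothetical. The sketch assumes the Azure Pipelines predefined variable `Build.BuildId`, which scripts see as the `BUILD_BUILDID` environment variable, and falls back to a `dev` tag locally. A real pipeline would more likely use the built-in Docker and Kubernetes tasks with service connections.

```python
import os
import shlex
from typing import List, Mapping, Optional


def image_tag(env: Optional[Mapping[str, str]] = None) -> str:
    """Use the pipeline's build ID as a unique image tag; fall back to 'dev' locally."""
    source = os.environ if env is None else env
    return source.get("BUILD_BUILDID", "dev")


def pipeline_commands(registry: str, image: str, deployment: str,
                      container: str, tag: str) -> List[List[str]]:
    """Return login, build, push, and deploy commands as argument lists (not executed)."""
    ref = f"{registry}.azurecr.io/{image}:{tag}"
    return [
        ["az", "acr", "login", "--name", registry],         # authenticate Docker to ACR
        ["docker", "build", "-t", ref, "."],                # build from the repo root
        ["docker", "push", ref],                            # publish the tagged image
        ["kubectl", "set", "image", f"deployment/{deployment}",
         f"{container}={ref}"],                             # roll the deployment to the new tag
        ["kubectl", "rollout", "status", f"deployment/{deployment}"],  # wait for the rollout
    ]


if __name__ == "__main__":
    # Hypothetical names for a single tenant's public web site.
    for argv in pipeline_commands("fabrikamacr", "content-web", "content-web",
                                  "content-web", image_tag()):
        print(shlex.join(argv))
```

The `kubectl` commands assume credentials are already configured, for example through `az aks get-credentials` or a pipeline service connection.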

Step 2: Design a proof of concept solution

Outcome

Design a solution and prepare to present the solution.

Business needs

Directions: Answer the following questions:

- Who should you present this solution to? Who is your target customer audience? Who are the decision makers?
- What customer business needs do you need to address with your solution?

Design

Directions: Respond to the following questions:

High-level architecture

- Based on the customer situation, what containers would you propose as part of the new microservices architecture for a single conference tenant?
- Without getting into the details (the following sections will address the particular details), diagram your initial vision of the container platform, the containers that should be deployed (for a single tenant), and the data tier.

Choosing a container platform on Azure

- List the potential platform choices for deploying containers to Azure.
- Which would you recommend and why?
- Describe how the customer can provision their Azure Kubernetes Service (AKS) environment to get their POC started.
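One way to provision a POC environment is with the Azure CLI. The Python sketch below assembles, but does not run, a plausible command sequence. It creates a resource group, an Azure Container Registry, and an AKS cluster with the monitoring add-on and pull access to that registry, then fetches `kubectl` credentials. The resource names, region, node count, and registry SKU are hypothetical placeholders; check the flags against the current `az aks create` reference before relying on them.

```python
import re
import shlex
from typing import List

# Azure Container Registry names must be 5-50 alphanumeric characters.
ACR_NAME = re.compile(r"[a-zA-Z0-9]{5,50}")


def provisioning_commands(resource_group: str, location: str, registry: str,
                          cluster: str, node_count: int = 2) -> List[List[str]]:
    """Return az CLI commands (as argument lists) for a minimal AKS proof of concept."""
    if not ACR_NAME.fullmatch(registry):
        raise ValueError(f"invalid registry name: {registry!r}")
    if node_count < 1:
        raise ValueError("node_count must be at least 1")
    return [
        # Resource group that holds everything for the POC.
        ["az", "group", "create", "--name", resource_group, "--location", location],
        # Private registry for the team's images.
        ["az", "acr", "create", "--resource-group", resource_group,
         "--name", registry, "--sku", "Basic"],
        # Cluster with the monitoring add-on and pull access to the registry.
        ["az", "aks", "create", "--resource-group", resource_group, "--name", cluster,
         "--node-count", str(node_count), "--enable-addons", "monitoring",
         "--attach-acr", registry, "--generate-ssh-keys"],
        # Merge the cluster's credentials into the local kubeconfig for kubectl.
        ["az", "aks", "get-credentials", "--resource-group", resource_group,
         "--name", cluster],
    ]


if __name__ == "__main__":
    # Hypothetical names and region; registry names must also be globally unique.
    for argv in provisioning_commands("fabrikam-rg", "eastus", "fabrikamacr", "fabrikam-aks"):
        print(shlex.join(argv))
```

Printing rather than executing keeps the sketch safe to run. Passing each list to `subprocess.run(argv, check=True)` would execute it against a signed-in Azure CLI.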

Containers, discovery, and load balancing

- Describe the high-level manual steps developers will follow for building images and running containers on Azure Kubernetes Service (AKS) as they build their POC. Include the following components in the summary:
  - The Git repository containing their source.
  - Docker image registry.
  - Steps to build Docker images and push to the registry.
  - Run containers using the Kubernetes dashboard.
- What options does the customer have for a Docker image registry and container scanning, and what would you recommend?
- How will the customer configure web site containers so that they are reachable publicly at port 80/443 from Azure Kubernetes Service (AKS)?
- Explain how Azure Kubernetes Service (AKS) can route requests to multiple web site containers hosted on the same node at port 80/443.
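A common answer to the last two questions is an ingress controller. A single controller (for example, NGINX) is exposed through one Kubernetes `Service` of type `LoadBalancer` on ports 80/443. `Ingress` rules then route each request by host name to the right tenant's service, so many web site containers can share the same node and public ports. The Python sketch below builds such an `Ingress` object as a dictionary in the `networking.k8s.io/v1` schema. The host names, service names, ingress class, and service port are hypothetical, and TLS configuration is omitted. `backend_for` is a simplification: real controllers also match paths and wildcard hosts.

```python
import json
from typing import Dict, Optional


def conference_ingress(sites: Dict[str, str], ingress_class: str = "nginx") -> dict:
    """Build an Ingress that routes each host name to its tenant's web Service on port 80."""
    rules = []
    for host, service in sorted(sites.items()):
        rules.append({
            "host": host,
            "http": {
                "paths": [{
                    "path": "/",
                    "pathType": "Prefix",
                    "backend": {"service": {"name": service, "port": {"number": 80}}},
                }]
            },
        })
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "Ingress",
        "metadata": {"name": "conference-sites"},
        "spec": {"ingressClassName": ingress_class, "rules": rules},
    }


def backend_for(ingress: dict, host: str) -> Optional[str]:
    """Mimic exact host matching: return the Service name a host's rule sends traffic to."""
    for rule in ingress["spec"]["rules"]:
        if rule.get("host") == host:
            return rule["http"]["paths"][0]["backend"]["service"]["name"]
    return None


if __name__ == "__main__":
    sites = {"tenant-a.example.com": "tenant-a-web", "tenant-b.example.com": "tenant-b-web"}
    manifest = conference_ingress(sites)
    # kubectl accepts JSON as well as YAML, e.g. `kubectl apply -f ingress.json`.
    print(json.dumps(manifest, indent=2))
```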

Scalability considerations

- Explain to the customer how Azure Kubernetes Service (AKS) and its preconfigured virtual machine scale sets support cluster autoscaling.
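Here is roughly how the AKS cluster autoscaler works. It watches for pods that cannot be scheduled because no node has enough free resources. When it finds them, it adds nodes (instances in the node pool's virtual machine scale set) up to a configured maximum. It also removes underused nodes down to a configured minimum. You set these limits with `--min-count` and `--max-count` alongside `--enable-cluster-autoscaler`. The Python sketch below is a deliberate simplification that sizes a pool from CPU requests alone, using first-fit-decreasing bin packing. The real autoscaler simulates scheduling and considers memory and other constraints, so treat the numbers as illustrative only.

```python
from typing import Iterable, List


def packed_node_count(cpu_requests_m: Iterable[int], allocatable_cpu_m: int) -> int:
    """First-fit decreasing: how many equal-size nodes the CPU requests (millicores) need."""
    free: List[int] = []  # remaining millicores on each simulated node
    for request in sorted(cpu_requests_m, reverse=True):
        if request > allocatable_cpu_m:
            raise ValueError(f"a pod requesting {request}m can never fit on one node")
        for i, remaining in enumerate(free):
            if remaining >= request:
                free[i] = remaining - request
                break
        else:
            free.append(allocatable_cpu_m - request)  # no room anywhere: add a node
    return len(free)


def target_node_count(cpu_requests_m: Iterable[int], allocatable_cpu_m: int,
                      min_count: int, max_count: int) -> int:
    """Clamp the packed estimate to the node pool's autoscaler limits."""
    if not 0 <= min_count <= max_count:
        raise ValueError("require 0 <= min_count <= max_count")
    needed = packed_node_count(cpu_requests_m, allocatable_cpu_m)
    return max(min_count, min(max_count, needed))


if __name__ == "__main__":
    # Three 1000m pods on nodes with 1900m allocatable: summing CPU suggests 2 nodes,
    # but no node can hold two pods, so packing needs 3.
    print(target_node_count([1000] * 3, 1900, min_count=1, max_count=5))
```

When the packed estimate exceeds `max_count`, the extra pods would simply stay pending, which is the signal to raise the limit or add capacity.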

Automating DevOps workflows

- Describe how Azure DevOps can help the customer automate their continuous integration and deployment workflows and the Azure Kubernetes Service (AKS) infrastructure.
- Describe the recommended approach for keeping Azure Kubernetes Service (AKS) nodes up to date with the latest security patches or supported Kubernetes versions.
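AKS does not let a cluster skip Kubernetes minor versions. Moving several minor versions forward therefore means a sequence of upgrades, each performed with `az aks upgrade --kubernetes-version`. Node OS security patches are a separate concern, handled by node image upgrades (for example, `az aks nodepool upgrade --node-image-only`). The Python sketch below plans the sequential path from a list of available versions. The version numbers are made up, and choosing the newest patch of each intermediate minor version is an assumed policy, not a rule AKS imposes.

```python
from typing import Dict, Iterable, List, Tuple

Version = Tuple[int, int, int]


def parse_version(text: str) -> Version:
    """Turn '1.28.5' into (1, 28, 5) so versions compare numerically."""
    major, minor, patch = (int(part) for part in text.split("."))
    return major, minor, patch


def upgrade_path(current: str, target: str, available: Iterable[str]) -> List[str]:
    """Plan sequential upgrades that never skip a minor version."""
    versions = [parse_version(v) for v in available]
    cur, tgt = parse_version(current), parse_version(target)
    if tgt not in versions:
        raise ValueError(f"target {target} is not in the available list")
    if tgt[0] != cur[0]:
        raise ValueError("this sketch only plans upgrades within one major version")
    if tgt <= cur:
        return []

    # Newest available patch for each minor version (an assumed policy choice).
    newest: Dict[int, Version] = {}
    for v in versions:
        if v[0] == cur[0] and (v[1] not in newest or v > newest[v[1]]):
            newest[v[1]] = v

    path: List[str] = []
    for minor in range(cur[1] + 1, tgt[1]):
        if minor not in newest:
            raise ValueError(f"no available {cur[0]}.{minor}.x version to step through")
        path.append(".".join(str(n) for n in newest[minor]))
    path.append(target)
    return path


if __name__ == "__main__":
    # Made-up versions; in practice they would come from `az aks get-versions`.
    available = ["1.27.9", "1.28.3", "1.28.5", "1.29.2"]
    for step in upgrade_path("1.27.9", "1.29.2", available):
        print(f"az aks upgrade --resource-group <rg> --name <cluster> --kubernetes-version {step}")
```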

Prepare

- Identify any customer needs that are not addressed with the proposed solution.
- Identify the benefits of your solution.
- Determine how you will respond to the customer’s objections.

Additional references

| Description | Links |
|---|---|
| Azure Kubernetes Service (AKS) | https://docs.microsoft.com/en-us/azure/aks/intro-kubernetes/ |
| Kubernetes | https://kubernetes.io/docs/home/ |
| AKS FAQ | https://docs.microsoft.com/en-us/azure/aks/faq |
| Autoscaling AKS | https://github.com/kubernetes/autoscaler |
| AKS Cluster Autoscaler | https://docs.microsoft.com/en-us/azure/aks/cluster-autoscaler |
| Upgrading an AKS cluster | https://docs.microsoft.com/en-us/azure/aks/upgrade-cluster |
| Azure Pipelines | https://docs.microsoft.com/en-us/azure/devops/pipelines/ |
| Container Security | https://docs.microsoft.com/en-us/azure/container-instances/container-instances-image-security/ |
| Image Quarantine | https://github.com/Azure/acr/tree/master/docs/preview/quarantine/ |
| Container Monitoring Solution | https://docs.microsoft.com/en-us/azure/azure-monitor/insights/containers |