Case Study: High Performance Computing

High Performance Computing whiteboard design session student guide

Abstract and learning objectives

In this Case Study, you will work with a group to design a scale-out media processing solution using High Performance Computing (HPC) techniques in Azure.

At the end of this Case Study, you will be better able to design and recommend High Performance Computing solutions that are highly scalable and can be configured through declarative means as opposed to large amounts of complicated application code. You will also learn how to manage and monitor these solutions effectively to ensure predictable outcomes.

Step 1: Review the customer case study

Outcome

Analyze your customer’s needs.

Customer situation

ThoughtRender provides image and video processing services to many industries: marketing and advertising, retail, medical, and media and entertainment. You know those furniture magazines you love to look at? Most of the pictures in these magazines are generated by the HPC clusters at ThoughtRender. Their unique ability to tie industry solutions together into a reliable service for their customers (e.g., furniture magazine designers and 3D animated movie makers), combined with their in-house image and video expertise, sets them apart from competitors.

ThoughtRender currently operates their services on-premises, with their own HPC clusters and other IT infrastructure, but their success has led to challenges in growing and in scaling to new jobs and new industries. They are curious about the cloud and think it could help them not only scale, but also reduce costs and pass savings on to customers, address the seasonality of rendering demand (by paying for resources only when they are actually used), and take advantage of new technologies, such as the latest GPUs.

Customers often ask questions like, “Could you get that job processed this week, instead of in 3 weeks?” ThoughtRender thinks that bursting jobs to the cloud could help them deliver bigger jobs, or deliver regular jobs more quickly, for their customers (e.g., in 1 day instead of 5 days). They intend to pilot a solution to address this.

They also want to consider how to integrate with their on-premises infrastructure and operating model. They have three large on-premises HPC clusters, one at each of their sites in London, New York, and Singapore. They also have labs at each site that provide high-end visualization workstations for quality control, mostly operated by internal staff, but sometimes together with customers. ThoughtRender has 3 petabytes (PB) of data (customer assets and working “scratch” data shares), with 1 PB typically stored per site.

Thomas Pix, CIO of ThoughtRender, is looking to modernize their story. He would love to understand how to tap into the "power of the cloud" to be more flexible to customer demands, but also to consider new ways of working. Their on-premises HPC clusters generate large amounts of data, which also spends a lot of time moving between sites. He would love to see if they can move this data away from their on-premises datacenters into the cloud and enhance their ability to load, process, and analyze it going forward. Given his long-standing relationship with Microsoft, he would like to see if Azure can meet his needs.

Customer needs

- Want to respond more flexibly to customer demands (i.e., to process more work, or to complete work faster).

- Want to investigate the potential advantages of using the cloud (e.g., latest technologies, bursting capability, cost savings).

- Want to integrate with their on-premises systems where possible (e.g., to burst to the cloud for additional capacity).

- Want to collaborate on and visualize the results of their work, on their own or together with customers.

Customer objections

- Will Azure give us control to schedule jobs when we want?

- Will the capacity be available on Azure when we want it?

- We heard Microsoft does Linux now. But how true is this? Will it work with our chosen Linux version?

- We have petabytes of data on-premises. It would cost us a fortune and take ages to move this to the cloud!

- We heard that collaboration is possible for 3D imaging workstations, but we have very specific color requirements and buy top-end workstation equipment for our users. Our users just wouldn't get the interaction performance they require with something "remote" in the cloud.

- Will this take jobs away from our IT system administrators and HPC engineers?

Infographic for common scenarios

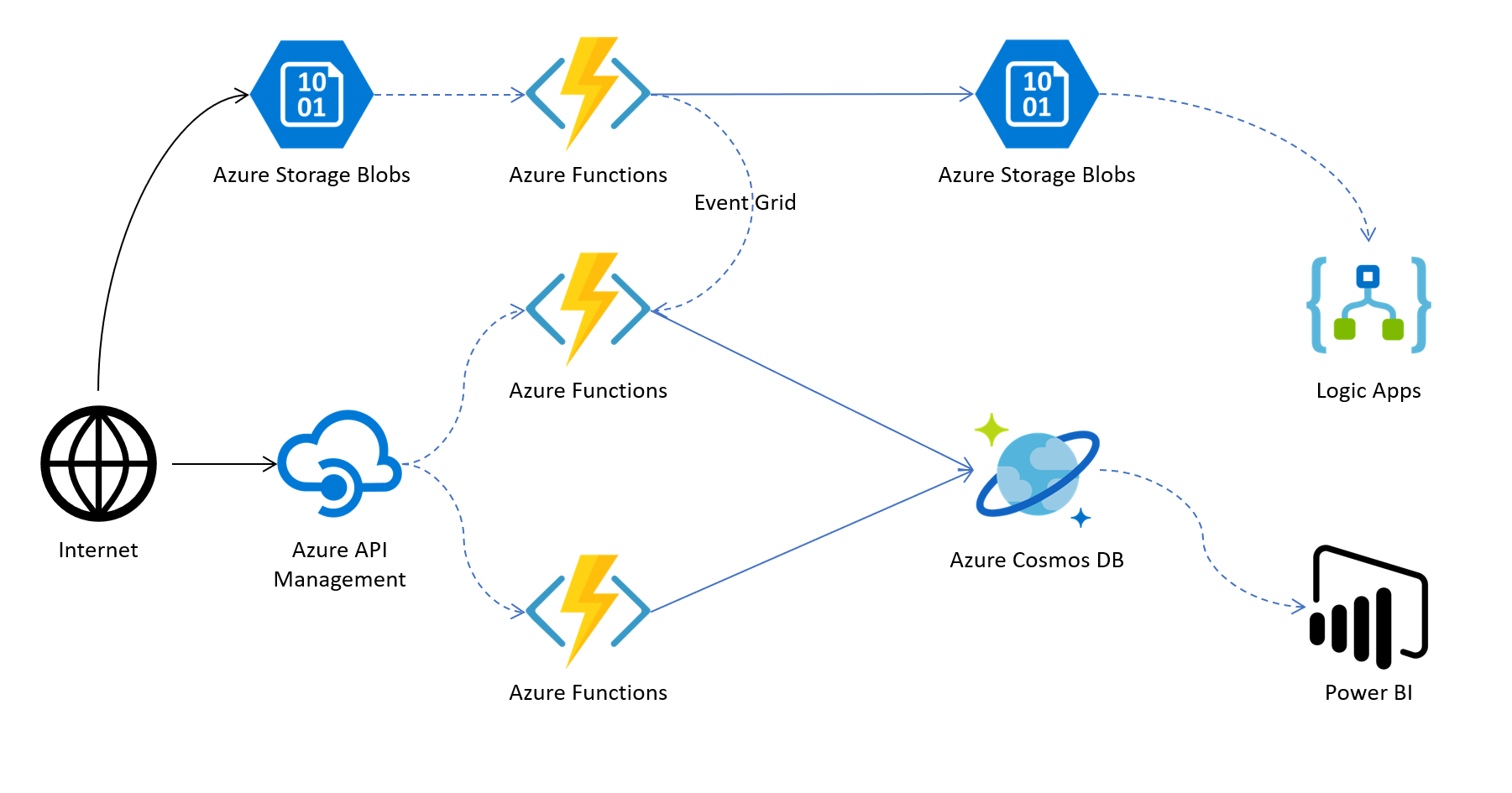

High-performance computing (HPC) applications can scale to thousands of compute cores, extend on-premises compute, or run as a 100% cloud-native solution. This HPC solution is implemented with Azure Batch, which provides job scheduling, auto-scaling of compute resources, and execution management as a platform service (PaaS) that reduces HPC infrastructure code and maintenance.

https://azure.microsoft.com/en-us/solutions/architecture/hpc-big-compute-saas/

Step 2: Design a proof of concept solution

Outcome

Design a solution.

Business needs

Directions: Answer the following questions and list the answers on a flip chart:

- Who should you present this solution to? Who is your target customer audience? Who are the decision makers?

- What customer business needs do you need to address with your solution?

Design

Directions: Respond to the following questions:

High-level architecture

- Without getting into the details (the following sections will address the particular details), diagram your initial vision for handling the top-level requirements for data loading, video and image processing, batch computing, integration with on-premises systems, and visualization. You will refine this diagram as you proceed.

Data loading

- How would you recommend ThoughtRender get their data into (and out of) Azure? What services would you suggest and what are the specific steps they would need to take to prepare the data, to transfer the data, and where would the loaded data land?

- Update your diagram with the data loading process with the steps you identified.
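When comparing online transfer with offline shipment for the data loading step, a back-of-the-envelope estimate of transfer time can help frame the discussion. The sketch below is illustrative only: the 1 PB per-site figure comes from the case study, while the link speeds and the 80% sustained-utilization factor are assumptions, not measurements from ThoughtRender.

```python
def transfer_days(data_bytes: float, link_gbps: float, utilization: float = 0.8) -> float:
    """Estimate the days needed to move data_bytes over a link of link_gbps.

    utilization is the assumed fraction of the nominal link speed that can
    be sustained in practice (hypothetical default of 80%).
    """
    if link_gbps <= 0:
        raise ValueError("link_gbps must be positive")
    if not 0 < utilization <= 1:
        raise ValueError("utilization must be in (0, 1]")
    total_bits = data_bytes * 8
    effective_bits_per_second = link_gbps * 1e9 * utilization
    seconds = total_bits / effective_bits_per_second
    return seconds / 86_400  # seconds per day


PB = 10**15  # decimal petabyte, in bytes

# Assumed link speeds for illustration; substitute the site's real uplink.
for gbps in (1, 10, 100):
    days = transfer_days(PB, gbps)
    print(f"{gbps:>3} Gbps: {days:6.1f} days to move 1 PB")
```

Under these assumptions, moving one site's 1 PB takes roughly 116 days at 1 Gbps and about 12 days at 10 Gbps, which is the kind of figure that informs whether a network transfer or a physical shipment is the better fit.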

Video and Image Processing

- What software tools will be used here? Are they commercial, open source, or a combination of both? Will ThoughtRender need to throw away the tools they are already using, or are some integrations possible?

- For Linux-based tools, does it matter which flavor of Linux is chosen?

- When are these tools used at ThoughtRender? Are they part of a workflow?

Batch Computing

- What technology would you recommend ThoughtRender use for implementing their rendering compute workloads in Azure?

- Are there particular types of compute instances you would guide ThoughtRender to use?

- Are compute-intensive, memory-intensive, disk-intensive, or network-optimized instances needed?

- Are GPU-based instances needed?

- How would you guide ThoughtRender to load data so it can be processed by the rendering compute workload?

- How will this data be used at the beginning, middle, and end of a compute workload?

- Where will this data be stored?

- Will this data be stored on compute instances during a batch run? Would you store data on each compute node working in a batch, or would you store data in a shared area?

- What sort of performance will be required from this storage?

- Will this data need to be backed up or archived?
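As an aid to the instance-sizing questions above, the following sketch estimates how many compute nodes a burst would need to meet a deadline. It assumes the rendering job is embarrassingly parallel across frames, which is an assumption rather than something the case study states. The frame count, per-frame render time, and the function name are hypothetical; only the 5-day and 1-day turnaround targets come from the customer situation.

```python
import math


def nodes_needed(frames: int, minutes_per_frame: float, deadline_hours: float,
                 frames_in_parallel_per_node: int = 1) -> int:
    """Estimate the node count needed to render all frames by the deadline.

    Assumes frames are independent, so work divides evenly across nodes,
    and ignores node startup, data staging, and scheduling overhead.
    """
    if deadline_hours <= 0 or frames_in_parallel_per_node < 1:
        raise ValueError("deadline must be positive and parallelism at least 1")
    total_node_minutes = frames * minutes_per_frame / frames_in_parallel_per_node
    return math.ceil(total_node_minutes / (deadline_hours * 60))


# Hypothetical job: 10,000 frames at 30 minutes per frame.
FRAMES, MINUTES_PER_FRAME = 10_000, 30

for label, hours in (("5 days", 5 * 24), ("1 day", 24)):
    count = nodes_needed(FRAMES, MINUTES_PER_FRAME, hours)
    print(f"Deadline of {label}: about {count} nodes")
```

With these hypothetical inputs, the 5-day deadline needs about 42 nodes and the 1-day deadline about 209, which illustrates why on-demand capacity is attractive for shortening turnaround without permanently growing the on-premises clusters.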

Operationalizing and Integrating

- Is it possible for ThoughtRender to connect their batch rendering workloads in Azure to their on-premises rendering workloads at their various sites? If so, will the connection be made at a networking level, an operating system level, or an application level?

- Is it possible for ThoughtRender to keep their Azure infrastructure separate from (i.e., completely unconnected to) their on-premises HPC clusters?

Visualization and Remote Workstations

- Are special types of compute instances needed for remote workstations in Azure?

- Is a special type of software required for client access? Could users simply use remote desktop? Would this perform the way ThoughtRender (or their customers) would like it to?

- Would it be secure?

- Would it be color correct?

- Would it perform?

- Would it allow collaboration or interactivity?

Additional references

| Description | Links |
| --- | --- |
| HPC Solution Architectures | https://azure.microsoft.com/en-us/solutions/high-performance-computing/#references/ |
| Rendering on Azure | https://rendering.azure.com/ |
| Azure Batch | https://docs.microsoft.com/en-us/azure/batch/ |
| Batch Shipyard | https://github.com/Azure/batch-shipyard/ |
| Batch CLI extensions | https://github.com/Azure/azure-batch-cli-extensions/ |
| Batch Explorer | https://github.com/Azure/BatchExplorer/releases/ |
| AzCopy (Linux) | https://docs.microsoft.com/en-us/azure/storage/storage-use-azcopy-linux/ |
| Azure Batch Rendering Service | https://docs.microsoft.com/en-us/azure/batch/batch-rendering-service/ |

Supplemental Materials

Once you have completed the workshop, the following projects may help you understand other ways in which Azure Batch can be applied beyond media and rendering.

| Description | Links |
| --- | --- |
| CycleCloud Lab | https://github.com/azurebigcompute/BigComputeLabs/tree/master/CycleCloud/ |
| doAzureParallel R Package | https://github.com/Azure/doAzureParallel/ |
| Big Compute Bench VM | https://github.com/mkiernan/bigcomputebench/ |